WebRTC is used to add audio/video streaming for the players sitting at the poker table.

Simple Peer over PeerJS

Simple Peer is used for the WebRTC connections.

I chose this library over PeerJS since the latter adds some custom behavior on top of WebRTC. In addition, PeerJS seems to rely on running a PeerJS server (though I did not verify this). There is nothing wrong with that, but since I wanted to write my WebSocket game server in Go, I didn't want to run a separate server just for signaling.

The nice thing about Simple Peer is its level of abstraction, which makes signaling and ICE candidate exchange very simple: the WebSocket server only needs to forward messages to the appropriate clients.

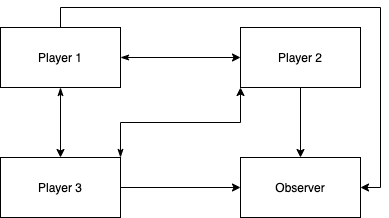

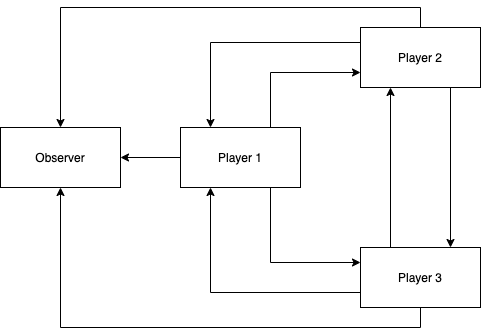

Modified peer-to-peer full-mesh topology

For simplicity and to save on server bandwidth, I did not use the SFU (selective forwarding unit) approach, since that would require running a WebRTC server to forward client streams.

With six players streaming audio/video to every connected client, it seemed reasonable to take the full-mesh approach as long as everyone had a decent internet connection. During play testing, it worked fine.

Unfortunately, a plain full-mesh topology did not work for me because of a limitation in how Simple Peer works. If a client sends an offer without including an audio/video stream, the client on the other side cannot send audio/video back, since audio/video streaming was not set up during signaling. If the offering client does send a stream, the other client can send its own stream back over the same connection without renegotiating, since the connection works bi-directionally.

The only way to resolve the problem would be to renegotiate the WebRTC connection. In Simple Peer, we would have to make the other client send the offer.

The problem was that the poker game allows observers, people who can watch the game and receive the audio/video streams, but will not be streaming audio/video themselves. If an observer joined the game first, they could potentially send an offer to one of the players. This player would not be able to send their stream since the observer is not sending a stream.

One option would be to have players send their video streams to all other players. The problem with this is that it gets confusing when a player has already sent a stream to another player: it would be more efficient for the receiving player to send their stream back over the same connection, so the logic would have to handle both cases.

To clean that up, I settled on the following approach:

- A player sends a one-way audio/video stream to every other player and every observer
- For player-to-player connections, this means there are two connections:
  - One audio/video stream from player A to player B
  - One audio/video stream from player B to player A
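A small function gives a rough sense of what this approach costs. It assumes that every seated player sends to every other client and that observers never send. connectionCounts is a hypothetical helper and is not part of the game code.

```javascript
// Hypothetical helper: count the one-way connections in the modified mesh,
// where every seated player sends to every other client and observers only receive.
function connectionCounts(players, observers) {
  const perPlayerUpload = players - 1 + observers; // streams each player sends
  const perPlayerDownload = players - 1; // streams each player receives
  const perObserverDownload = players; // observers receive one stream per player
  const total = players * perPlayerUpload; // one connection per sent stream
  return {total, perPlayerUpload, perPlayerDownload, perObserverDownload};
}
```

With six players and no observers, there are 30 one-way connections, and each player uploads five streams. Adding two observers raises the total to 42 and each player's uploads to seven. Per-player upload therefore grows with every observer, and that is the trade-off accepted by not running an SFU.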

Signaling + ICE candidates

Simple Peer makes signaling very easy. All we need to do is forward the messages to the right client.

The signaling messages are forwarded using the existing WebSocket connection to the game server.

Backend

The backend has two events for signaling:

```go
// WebRTC signaling actions
const (
	actionOnReceiveSignal string = "on-receive-signal"
	actionSendSignal      string = "send-signal"
)
```

The frontend clients send the send-signal event to the server. The server then forwards the message in send-signal to the appropriate recipient by sending an on-receive-signal event.

The message payload includes the following data:

- peerID
  - Client ID generated by the game server. In send-signal, it identifies the client that should receive the message. When the server forwards the message as on-receive-signal, it replaces peerID with the sender's client ID (see createOnReceiveSignal below).
- streamID
  - Generated on the client side as {userID}-{uuid}, where User ID is the Client ID of the sender
  - If the User ID matches the frontend client's own User ID, this client is the one sending the stream
- signalData
  - Data from Simple Peer
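The stream ID encodes who owns the stream, so a client can parse the ID to tell whether a stream is its own. Below is a minimal sketch with hypothetical helper names. It assumes the uuid part is a canonical 36-character UUID string, which is what uuidv4() produces. Cutting off the fixed-length suffix, instead of splitting on the first hyphen, keeps user IDs that contain hyphens intact.

```javascript
// Hypothetical helpers for the "{userID}-{uuid}" stream ID format.
// Assumes the uuid part is a canonical 36-character UUID string.
const UUID_LENGTH = 36;

function makeStreamID(userID, uuid) {
  return `${userID}-${uuid}`;
}

// Drop the trailing "-{uuid}" (one separator plus 36 characters). Cutting a fixed-length
// suffix keeps user IDs that themselves contain hyphens intact.
function streamOwner(streamID) {
  return streamID.slice(0, -(UUID_LENGTH + 1));
}

// True if this client created (and is sending) the stream.
function isOwnStream(streamID, myUserID) {
  return streamOwner(streamID) === myUserID;
}
```

Comparing the parsed owner for equality is stricter than a plain prefix check. With startsWith, a user ID of "ab" would also match streams owned by "abc".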

The backend logic is pretty simple:

```go
// HandleSendSignal handles WebRTC signaling messages by forwarding them
// from client c to the client identified by recipientID.
func HandleSendSignal(c *Client, recipientID string, streamID string, signalData interface{}) error {
	recipient, ok := c.hub.clients[recipientID]
	if !ok {
		// Temporarily make this a noop until I can improve the error handling a bit
		// return fmt.Errorf("Recipient userID (%s) does not exist", recipientID)
		return nil
	}

	// The recipient sees the sender's ID as peerID so it knows who to reply to.
	recipient.send <- createOnReceiveSignal(c.id, streamID, signalData)
	return nil
}

func createOnReceiveSignal(peerID string, streamID string, signalData interface{}) Event {
	return Event{
		Action: actionOnReceiveSignal,
		Params: map[string]interface{}{
			"peerID":     peerID,
			"streamID":   streamID,
			"signalData": signalData,
		},
	}
}
```
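The one subtle detail in this forwarding step is that peerID changes meaning in transit. In send-signal, it names the recipient. In on-receive-signal, the server replaces it with the sender's client ID so the recipient knows whom to answer. The sketch below models that step in JavaScript with an in-memory Map of clients. forwardSignal and the Map are assumptions for illustration, not the Go server's actual API. The lowercase action/params JSON field names are also assumed, based on the frontend's sendSignal.

```javascript
// Hypothetical model of the server's relay step. `clients` maps client IDs to
// objects with a send(string) method, standing in for the Go hub's client map.
function forwardSignal(clients, senderID, message) {
  const {peerID: recipientID, streamID, signalData} = message.params;
  const recipient = clients.get(recipientID);
  if (!recipient) {
    // Like the Go handler, silently ignore unknown recipients for now.
    return false;
  }
  recipient.send(JSON.stringify({
    action: "on-receive-signal",
    params: {
      peerID: senderID, // swapped: the recipient needs to know who sent it
      streamID,
      signalData,
    },
  }));
  return true;
}
```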

Frontend

Most of the WebRTC logic resides in the frontend. The main requirement is managing the streams for all the different clients.

Basic recap of the requirements and logic:

- An observer will receive a read-only stream from any seated players

- A player will receive a read-only stream from any seated players

- Once seated, a player will send offers to each connected client

- Once a new client joins, seated players will send an offer to create a stream

- If an observer leaves, all of its streams will be closed

- If a player leaves, all of its streams will be closed (both sent and received)
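Each stored stream entry records the other party in peerID, whether this client is sending or receiving the stream. Cleaning up after a departed client therefore reduces to one filter. This is a minimal sketch with a hypothetical helper name, assuming the store shape described in the next section. Calling Simple Peer's destroy() makes the peer emit close, and the close handler in initPeer turns that into a REMOVE_STREAM dispatch.

```javascript
// Hypothetical helper: close every connection shared with a client that left.
// `streams` maps streamID -> {peer, peerID, stream, streamID}, and peerID is
// always the other party, whichever direction the stream flows.
function closeStreamsForClient(streams, clientID) {
  const closed = [];
  for (const entry of Object.values(streams)) {
    if (entry.peerID === clientID) {
      // Simple Peer's destroy() tears down the RTCPeerConnection and emits "close".
      entry.peer.destroy();
      closed.push(entry.streamID);
    }
  }
  return closed;
}
```

This covers observers (only streams sent to them) and players (streams in both directions) with the same rule.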

App Store / Reducer

Streams are stored in the application store:

```javascript
const initialState = {
  chat: {
    messages: [],
  },
  error: null,
  gameState: null,
  seatID: null,
  streams: {}, // Connected streams, both sent/received (streamID -> {peer, peerID, stream, streamID})
  streamSeatMap: {}, // So we know which stream to render at each seat (seatID -> ClientID)
  userHoleCards: [null, null],
  userID: null,
  username: null,
  userStream: null, // Local MediaStream
}
```

The reducer handles three self-explanatory actions:

```javascript
// `update` is assumed to be immutability-helper's update(), which supports $set and $unset
case actionTypes.WEBRTC.REMOVE_STREAM:
  return {
    ...state,
    streams: update(state.streams, {$unset: [action.streamID]}),
  }

case actionTypes.WEBRTC.SET_STREAM:
  return {
    ...state,
    streams: update(state.streams, {
      [action.streamID]: {$set: {
        peer: action.peer,
        peerID: action.peerID,
        stream: action.stream,
        streamID: action.streamID,
      }},
    }),
  }

case actionTypes.WEBRTC.SET_STREAM_SEAT_MAP:
  return {
    ...state,
    streamSeatMap: action.streamSeatMap,
  }
```
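The same three updates can be written with plain object spread if you would rather not depend on the update helper (which appears to be immutability-helper). This is a sketch, not the app's reducer. The action type strings and the webrtcReducer name are hypothetical stand-ins for actionTypes.WEBRTC.*. Object rest destructuring drops the removed key the way $unset does, and no branch mutates the previous state.

```javascript
// Hypothetical action type names standing in for actionTypes.WEBRTC.*.
const REMOVE_STREAM = "WEBRTC/REMOVE_STREAM";
const SET_STREAM = "WEBRTC/SET_STREAM";
const SET_STREAM_SEAT_MAP = "WEBRTC/SET_STREAM_SEAT_MAP";

function webrtcReducer(state, action) {
  switch (action.type) {
    case REMOVE_STREAM: {
      // Copy the map without the removed key (same effect as $unset).
      const {[action.streamID]: _removed, ...rest} = state.streams;
      return {...state, streams: rest};
    }
    case SET_STREAM:
      // Replace (or add) the entry for this stream ID (same effect as $set).
      return {
        ...state,
        streams: {
          ...state.streams,
          [action.streamID]: {
            peer: action.peer,
            peerID: action.peerID,
            stream: action.stream,
            streamID: action.streamID,
          },
        },
      };
    case SET_STREAM_SEAT_MAP:
      return {...state, streamSeatMap: action.streamSeatMap};
    default:
      return state;
  }
}
```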

Actions - sendSignal

This action is called whenever Simple Peer emits a signal event.

```javascript
// Send Simple Peer signal data to the game server, which relays it to peerID
const sendSignal = (client, peerID, streamID, signalData) => {
  client.send(JSON.stringify({
    action: Event.SEND_SIGNAL,
    params: {
      peerID,
      signalData,
      streamID,
    },
  }))
}
```

Actions - receiveSignal

This action is called when the WebSocket server sends the on-receive-signal event.

If the signal is an offer, we are receiving a stream from a seated player. In this case, we create a non-initiator peer and pass it the offer. Simple Peer then emits an answer through its signal event, and that answer is sent back to continue setting up the WebRTC connection.

If the signal is not an offer, it is an answer or an ICE candidate. In this case, there should already be a stream entry, which we can look up using the streamID and peerID.

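```javascript
const onReceiveSignal = (dispatch, params, ws, appState) => {
  if (params.signalData.type === "offer") {
    // A seated player is offering us their stream: create the answering
    // (non-initiator) side without a local stream and feed it the offer.
    const peer = initPeer(dispatch, ws, false, false, params.peerID, params.streamID)
    peer.signal(params.signalData)
  } else {
    // Answer or ICE candidate: route it to the existing connection, if any.
    // `find` is assumed to be lodash's find, which also iterates object values.
    const stream = find(appState.streams, s => s.streamID === params.streamID && s.peerID === params.peerID)
    if (stream) {
      stream.peer.signal(params.signalData)
    }
  }
}
```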
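One way to keep this branching easy to test is to separate deciding what to do from doing it. routeSignal is a hypothetical pure function that is not part of the original code. It classifies an incoming on-receive-signal payload against the stored streams and leaves creating peers and calling peer.signal() to the caller.

```javascript
// Hypothetical pure version of the branching in onReceiveSignal.
// Returns {kind: "answer"} for offers, {kind: "forward", entry} when an existing
// connection matches, and {kind: "drop"} when nothing matches.
function routeSignal(streams, params) {
  if (params.signalData && params.signalData.type === "offer") {
    // New incoming stream: the caller should create a non-initiator peer.
    return {kind: "answer"};
  }
  // Answers and ICE candidates belong to a connection we already track.
  const entry = Object.values(streams).find(
    s => s.streamID === params.streamID && s.peerID === params.peerID,
  );
  return entry ? {kind: "forward", entry} : {kind: "drop"};
}
```

The "drop" case corresponds to the original code silently ignoring signals for unknown streams. Making it explicit makes it easier to log if signals ever arrive for connections that were already cleaned up.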

Actions - initPeer

The initPeer function is a helper called by updatePeers and receiveSignal. It creates a new Simple Peer object and sets up the event listeners, which dispatch to the main app reducer.

Both sides need to create a Simple Peer object. The offering side sets initiator to true and, in our case, always includes a MediaStream. The answering side sets initiator to false.

The signal event triggers a sendSignal action as described earlier.

The stream event tells us a new stream has been received, and we add that stream to the store so we can render it in the game.

When the error or close event fires, we need to clean up the stream.

```javascript
const initPeer = (dispatch, ws, initiator, stream, peerID, streamID) => {
  const peer = new Peer({initiator, stream})

  // Register the connection right away so answers and ICE candidates can find it.
  // The remote MediaStream is not available yet, so `stream` starts as null
  // (the reducer reads action.stream, so the key must be named `stream`).
  dispatch({
    type: actionTypes.WEBRTC.SET_STREAM,
    peer,
    peerID,
    stream: null,
    streamID,
  })

  // Relay offers, answers, and ICE candidates through the game server
  peer.on('signal', data => {
    sendSignal(ws, peerID, streamID, data)
  })

  peer.on('connect', () => {
    console.log(`Stream (${streamID}) connected`)
  })

  // The remote MediaStream arrived: store it so the seat can render it
  peer.on('stream', peerStream => {
    console.log(`Stream (${streamID}) added new stream`)
    dispatch({
      type: actionTypes.WEBRTC.SET_STREAM,
      peer,
      peerID,
      stream: peerStream,
      streamID,
    })
  })

  peer.on('close', () => {
    console.log(`Stream (${streamID}) disconnected`)
    dispatch({type: actionTypes.WEBRTC.REMOVE_STREAM, streamID})
  })

  peer.on('error', err => {
    console.log(`Stream (${streamID}) error: ${err}`)
    dispatch({type: actionTypes.WEBRTC.REMOVE_STREAM, streamID})
  })

  return peer
}
```

Actions - updatePeers

The updatePeers function is the main function for handling the WebRTC connection logic.

This function is called from onTakeSeat and updateGame.

We call it from onTakeSeat since this is when a player first sits down at the table, which means they need to send offers to all connected clients.

Connected clients are tracked by the clientSeatMap, which contains a client ID -> seat ID mapping, where seat ID is null if the client is not seated. This is not to be confused with the streamSeatMap.

We also need to call updatePeers from updateGame, since new players and observers can join the game at any time, so we need to keep checking the clientSeatMap for clients we have not yet sent our stream to.

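```javascript
// `keys` and `find` are assumed to be lodash helpers; `uuidv4` is the v4 export of the uuid package
const updatePeers = (dispatch, ws, clientSeatMap, userID, seatID, userStream, streams) => {
  // Only seated players with a local MediaStream send offers
  if (!seatID || !userStream) {
    return
  }

  keys(clientSeatMap).forEach(clientID => {
    if (clientID === userID) {
      return
    }

    // Have we already sent our stream to this client? Match on "{userID}-" so a
    // user ID that is a prefix of another (e.g. "1" and "12") cannot match.
    const stream = find(streams, s => s.streamID.startsWith(`${userID}-`) && s.peerID === clientID)
    if (!stream) {
      const streamID = `${userID}-${uuidv4()}`
      initPeer(dispatch, ws, true, userStream, clientID, streamID)
    }
  })
}
```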
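updatePeers runs on every updateGame event, so its decision needs to be idempotent. Once the stream entry for an offer is in the store, later calls skip that client. The sketch below pulls that decision into a pure, testable function. peersNeedingOffers and its hasUserStream flag are hypothetical. The caller is left to create the Simple Peer objects, for example via initPeer.

```javascript
// Hypothetical pure version of the updatePeers decision: which clients still need
// an offer from this user? Side effects (creating Simple Peer objects) stay with the caller.
function peersNeedingOffers(clientSeatMap, userID, seatID, hasUserStream, streams) {
  if (!seatID || !hasUserStream) {
    return []; // only seated players with a local stream send offers
  }
  const ownPrefix = `${userID}-`;
  const entries = Object.values(streams);
  return Object.keys(clientSeatMap).filter(clientID =>
    clientID !== userID &&
    // skip clients we already have an outgoing stream to
    !entries.some(s => s.streamID.startsWith(ownPrefix) && s.peerID === clientID),
  );
}
```

A stream received from a client does not count as one sent to it, so that client still gets an offer. This is what produces the two one-way connections between each pair of players.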

Solving the delayed video rendering problem

One problem I had was that the video stream would not always render right away when a connection was established. The video would only show up after an action that triggered a re-render of the UI.

This was because I initially took the simple approach of using useRef and useEffect. The problem is that when the ref is first set up, we cannot set the srcObject attribute at the same time, because the video tag has not rendered yet. Assigning to a ref's current property does not trigger a re-render, so nothing sets srcObject once the video element appears. This is why the stream works when the component gets re-rendered.

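To solve this problem, I ended up using the useState and useCallback approach. My understanding of why this works: a function passed as a ref is a callback ref. React calls it with the video element as soon as the element is attached to the DOM (and with null when it is detached), so srcObject is set the moment the element exists. Because the callback depends on stream, React also calls it again whenever the stream changes. Calling setVideoRef inside the callback stores the element in state. That triggers the re-render I was originally expecting from useRef and useEffect, so the muted logic runs against the mounted element.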

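```jsx
const [videoRef, setVideoRef] = useState(null)

// Callback ref: React calls this with the <video> element when it mounts
// (and with null when it unmounts), so srcObject is set as soon as the element exists
const onSetVideoRef = useCallback(node => {
  setVideoRef(node)
  if (node) {
    node.srcObject = stream
  }
}, [stream])

if (videoRef) {
  // Mute the local player's own video so they don't hear themselves;
  // otherwise honor the player's mute setting
  if (seatID === player.id) {
    videoRef.muted = true
  } else {
    videoRef.muted = player.muted
  }
}
```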

Then later:

```jsx
<video className="shadow-lg" ref={onSetVideoRef} autoPlay />
```

Improvements

The WebRTC setup works well, but there are some improvements that can be made.

Mapping client IDs to stream IDs to seat IDs

Right now, the process of determining which stream belongs to whom is convoluted. We need to perform quite a bit of translation to map streams to the correct seat, but this is mainly an issue with how the main game logic is designed.
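Rendering a seat requires a chain of translations. The seat ID maps to a client ID via streamSeatMap, and the client ID then maps to the received stream entry in streams. streamForSeat is a hypothetical helper that is not in the original code. It assumes the store shape shown earlier and the {userID}-{uuid} stream ID format. For the local player's own seat, it returns the local MediaStream.

```javascript
// Hypothetical helper: resolve the MediaStream to render at a seat by following
// seatID -> clientID (streamSeatMap) -> received stream entry (streams).
function streamForSeat(seatID, {streamSeatMap, streams, userID, userStream}) {
  const clientID = streamSeatMap[seatID];
  if (clientID == null) {
    return null; // empty seat
  }
  if (clientID === userID) {
    return userStream; // our own camera
  }
  // A stream we *receive* from clientID is owned by them and has them as peerID.
  const entry = Object.values(streams).find(
    s => s.peerID === clientID && s.streamID.startsWith(`${clientID}-`),
  );
  return entry ? entry.stream : null;
}
```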

Avoiding race conditions

Due to the asynchronous nature of JavaScript events, the game server may send onTakeSeat before updateGame. Since we don't know which will arrive first, we need to send the clientSeatMap with the onTakeSeat event as well. Normally, updateGame is what sends the clientSeatMap.

One reason we call updatePeers on every updateGame event is to ensure that any missed events are retried. For example, we could send an event when a player joins the game, but it would have the same race condition as onTakeSeat: we would need to include the clientSeatMap, the event could still be missed, and the game may not have started yet.

Using a video-to-canvas stream approach

One issue with local MediaStreams is that you cannot reliably control the resolution or aspect ratio. So if someone is playing the game from their phone, their video stream will be in portrait orientation, which looks odd.

The workaround is to draw the video onto a hidden canvas. Then you can crop the video to different dimensions and lower the resolution. This is the strategy I am using in Too Many Cooks.

Then you can call canvas.captureStream() to get a MediaStream object. The canvas stream only contains video, so you will need to add the audio track from the original MediaStream to it. The result can then be passed into Simple Peer.

The canvas will need to be refreshed constantly using requestAnimationFrame.
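Cropping comes down to some arithmetic. First, find the largest centered region of the camera frame that has the target aspect ratio. Then scale that region onto the canvas with the nine-argument form of drawImage(). The sketch below illustrates this under assumptions and is not the Too Many Cooks code. centerCrop and startCanvasStream are hypothetical names, and the caller chooses the canvas size and frame rate. Only centerCrop runs outside a browser.

```javascript
// Compute a centered crop of a source frame that matches the target aspect ratio,
// for use with drawImage(image, sx, sy, sw, sh, dx, dy, dw, dh).
function centerCrop(srcWidth, srcHeight, targetWidth, targetHeight) {
  if (srcWidth * targetHeight > srcHeight * targetWidth) {
    // Source is wider than the target: keep the full height, trim the sides.
    const sw = (srcHeight * targetWidth) / targetHeight;
    return {sx: (srcWidth - sw) / 2, sy: 0, sw, sh: srcHeight};
  }
  // Source is taller (e.g. a portrait phone camera): keep the full width, trim top and bottom.
  const sh = (srcWidth * targetHeight) / targetWidth;
  return {sx: 0, sy: (srcHeight - sh) / 2, sw: srcWidth, sh};
}

// Browser-only sketch (not called here). Assumes `video` is already playing the
// local camera stream and `audioTrack` comes from the original getUserMedia stream.
function startCanvasStream(video, audioTrack, width, height, fps = 15) {
  const canvas = document.createElement("canvas");
  canvas.width = width;
  canvas.height = height;
  const ctx = canvas.getContext("2d");

  // Redraw the cropped frame on every animation frame.
  const draw = () => {
    if (video.videoWidth > 0 && video.videoHeight > 0) {
      const {sx, sy, sw, sh} = centerCrop(video.videoWidth, video.videoHeight, width, height);
      ctx.drawImage(video, sx, sy, sw, sh, 0, 0, width, height);
    }
    requestAnimationFrame(draw);
  };
  requestAnimationFrame(draw);

  // captureStream() yields a video-only MediaStream, so re-attach the audio track.
  const stream = canvas.captureStream(fps);
  if (audioTrack) {
    stream.addTrack(audioTrack);
  }
  return stream; // pass this to Simple Peer instead of the raw camera stream
}
```

For a 720x1280 portrait camera and a 640x480 canvas, centerCrop keeps the full width and a 540-pixel-tall band starting 370 pixels from the top. As written, the animation loop never stops. A real version would keep the requestAnimationFrame handle and cancel it when the stream is closed.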

Repository

The GitHub repository can be found here: